I encountered this blog post which analyzes the emotional journey of War and Peace using R. Check it out, it’s pretty nifty. I was immediately intrigued by the dataset, which pulls key sentences out of each chapter, and assigns a “sentiment score” to each of them. I would like to learn to do this!

I’ve turned to a few of my trusty friends:

- Python, which you should be seeing a lot of in this blog.

- The Velveteen Rabbit by Margery Williams, easily my favorite short story. It’s also in the public domain. Handy!

One of my many bad habits is trying to reinvent the wheel. So, rather than code a sentiment analysis myself, I turned to the great wide web to see if someone else had already done this. I settled on the Python package TextBlob, which, as it says on its front page, “stands on the shoulders of NLTK and pattern.”

I’m immediately intrigued by all of the nifty tools that the three packages provide between them. In addition to sentiment analysis, there’s “noun phrase extraction”, stemming (a useful tool for machine learning!!), and synset similarity (e.g. “cat” and “dog” share “carnivore” as their closest common ancestor, and “cat” has a similarity score of 0.86 with “dog” and 0.17 with “box”).

I’m going to have to play with these. Yes, yes I am.

For now, though, we’re sticking with sentiment analysis. I want to get a feel for what the different algorithms do, how accurate I think they are, and how fast they are.

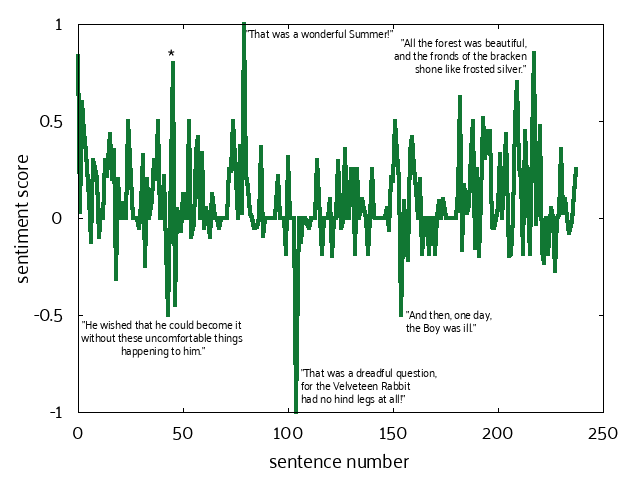

I downloaded the text for The Velveteen Rabbit from archive.org and manually extracted the core text of the story. TextBlob makes it really easy to break a giant hunk of text up into paragraphs and sentences. I fed each sentence through the TextBlob sentiment analyzer (which defaults to the method used by pattern) and found the graph to be a bit too noisy for my taste.
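Here is a minimal sketch of that sentence-by-sentence pass, not the exact script behind the plot. The helper names (`split_paragraphs`, `split_sentences`, `score_sentences`) are made up for illustration, and the regex splitter is only a crude stand-in for TextBlob's own sentence tokenizer. `TextBlob(...).sentiment.polarity` is the real TextBlob call; it is imported lazily so the rest runs without the package installed.

```python
import re


def split_paragraphs(text):
    """Split raw text into paragraphs on blank lines."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def split_sentences(paragraph):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace.

    Assumption: a rough stand-in for TextBlob's tokenizer, good enough for a demo.
    """
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", paragraph) if s.strip()]


def textblob_polarity(sentence):
    """Polarity in [-1, 1] from TextBlob's default (pattern) analyzer."""
    from textblob import TextBlob  # lazy import; needs `pip install textblob`
    return TextBlob(sentence).sentiment.polarity


def score_sentences(text, scorer):
    """Return a list of (sentence, score) pairs in reading order."""
    return [
        (sentence, scorer(sentence))
        for paragraph in split_paragraphs(text)
        for sentence in split_sentences(paragraph)
    ]
```

With TextBlob installed, something like `score_sentences(story_text, textblob_polarity)` (where `story_text` is a hypothetical string holding the story) would give one score per sentence, ready to plot.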

Some sentences also didn’t seem to match the score particularly well, for instance: “Sometimes she took no notice of the playthings lying about, and sometimes, for no reason whatever, she went swooping about like a great wind and hustled them away in cupboards,” scored a +0.8 (marked with a * in the plot). Nana is terrifying. Why is this positive?

Sentiment score as assigned by pattern over each sentence of The Velveteen Rabbit.

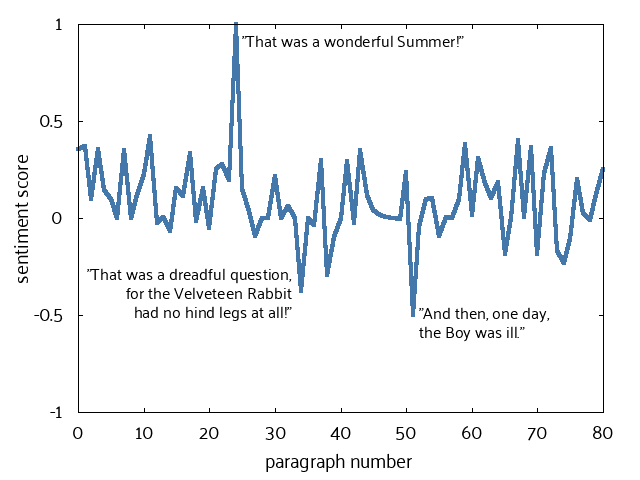

I got a much more consistent picture by breaking up the data into paragraphs, which makes sense as I’m essentially averaging over all of the sentiment within a paragraph. The highest point (“That was a wonderful summer!”) and lowest point (“And then, one day, the Boy was ill.”) both make a lot of sense. I would count those as the highest and lowest part of the book. It doesn’t seem to capture some of the more dramatic moments, though.
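To make the "averaging" intuition concrete, here is a small sketch that averages sentence scores within each paragraph and picks out the highest and lowest paragraphs. This is an approximation under my own assumptions: when TextBlob is handed a whole paragraph it computes its own score over the words, which need not equal the mean of the sentence scores. The function names are hypothetical.

```python
from statistics import mean


def paragraph_means(scored_paragraphs):
    """Average the sentence scores inside each paragraph.

    scored_paragraphs: list of lists, one inner list of sentence scores per paragraph.
    An empty paragraph is treated as neutral (0.0).
    """
    return [mean(scores) if scores else 0.0 for scores in scored_paragraphs]


def extremes(paragraph_scores):
    """Return (index of the highest paragraph, index of the lowest paragraph)."""
    indices = range(len(paragraph_scores))
    highest = max(indices, key=lambda i: paragraph_scores[i])
    lowest = min(indices, key=lambda i: paragraph_scores[i])
    return highest, lowest
```

Averaging smooths out single-sentence spikes, which is why the paragraph plot looks calmer than the sentence plot.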

What about the paragraph where the rabbit is lying on top of the garbage sack, waiting to be burned in the morning, and cries a tear?? That paragraph gets a piddly score of +0.18. I also expected there to be a clearer up-down-up pattern within the data like in the War and Peace post, but alas, that’s probably just wishful thinking at this point. The Velveteen Rabbit just doesn’t have the data that War and Peace does.

Sentiment score as assigned by pattern over each paragraph of The Velveteen Rabbit.

WELL, I say to myself, that was only one sentiment analyzer… What happens when we use the NLTK-based sentiment analyzer that TextBlob also ships (NaiveBayesAnalyzer)? This doesn’t just compare words to a default database of positive and negative words; it’s a classifier trained on a database of… movie reviews! Wow, what a perfect knowledge set! You get reviews that already come with a positive or negative label, written in the words that you want to score… This is the sort of super-smart thinking that I admire in other people.
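For reference, this is roughly how the NLTK-backed analyzer is invoked through TextBlob. Treat it as a sketch: `NaiveBayesAnalyzer` and the `p_pos` / `p_neg` fields are TextBlob's, but `signed_score` and the choice of `p_pos - p_neg` to get a number between -1 and 1 are my assumptions, since the analyzer itself only returns a label and two probabilities.

```python
def signed_score(p_pos, p_neg):
    """Collapse the two class probabilities into one number in [-1, 1].

    Assumption: one plausible mapping, not necessarily the one used for the plots.
    """
    return p_pos - p_neg


def naive_bayes_score(text):
    """Score text with TextBlob's NaiveBayesAnalyzer (trained on NLTK movie reviews)."""
    # Lazy imports: needs `pip install textblob` and the corpora fetched with
    # `python -m textblob.download_corpora`.
    from textblob import TextBlob
    from textblob.sentiments import NaiveBayesAnalyzer

    sentiment = TextBlob(text, analyzer=NaiveBayesAnalyzer()).sentiment
    # sentiment is a namedtuple of (classification, p_pos, p_neg)
    return signed_score(sentiment.p_pos, sentiment.p_neg)
```

One caveat: building a fresh `NaiveBayesAnalyzer()` on every call, as this sketch does, may retrain the classifier each time. TextBlob's `Blobber` class is meant for sharing one analyzer across many blobs.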

What other datasets might be good for this? Facebook comes to mind, especially since they added the variety of “reactions” to certain posts. That data is never going to get out into the wild, though, so perhaps we should think of some other things. Something to put on the back burner for now, methinks.

The first thing I notice trying to use the NLTK sentiment analyzer is, “HOLY CRAP THIS THING IS SO SLOW.” I hope it’s worth the effort! It had better deliver much more accurate sentiments than the pattern analyzer. Using the handy-dandy timeit module to analyze the longest paragraph, I find that it takes pattern ~3 ms to analyze it and NLTK a whopping 6 s. That makes pattern 2000 times faster than NLTK.
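A timing comparison along these lines can be sketched with `timeit`. The helper names are hypothetical, and `func` can be any callable that takes a string and returns a score; the 6 s and 3 ms figures in the comment are the ones quoted above.

```python
import timeit


def seconds_per_call(func, text, repeats=5):
    """Average wall-clock seconds for a single call of func(text)."""
    return timeit.timeit(lambda: func(text), number=repeats) / repeats


def speedup(slow_seconds, fast_seconds):
    """How many times faster the fast method is than the slow one."""
    return slow_seconds / fast_seconds


# Using the timings from the post: 6 s for NLTK versus 3 ms for pattern.
ratio = speedup(6.0, 0.003)  # about 2000
```

Keeping `repeats` small matters here: at several seconds per call, the default of a million repetitions that `timeit` uses would never finish.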

So how did it do? See for yourself:

![sentiment score vs sentence number A graph of sentiment score vs sentence number. The y-axis is scaled from -1 to 1. The x-axis has a range between 0 and 250. The overall pattern of the graph is wildly oscillatory with quotes printed at various points. "[The Boy] made nice tunnels for him under the bedclothes." at 60, -0.9. "[H]e saw two strange beings creep out of the tall bracken near him." at 75, 1. "[A]nd before he thought what he was doing he lifted his hind toe to scratch it." at 225, -0.95.](http://realerthinks.com/wordpress/wp-content/uploads/2016/12/sentiments_sent_bayes.png)

Sentiment score as assigned by NLTK over each sentence of The Velveteen Rabbit.

![sentiment score vs paragraph number A graph of sentiment score vs paragraph number. The y-axis is scaled from -1 to 1. The x-axis has a range between 0 and 80. The overall pattern of the graph is wildly oscillatory with quotes printed at various points. "[H]e always made the Rabbit a little nest somewhere among the bracken." at 25, 1. "It's as easy as anything!" at 30, -0.95. "[H]e wrinkled his nose suddenly and flattened his ears." at 42, -0.75.](http://realerthinks.com/wordpress/wp-content/uploads/2016/12/sentiments_par_bayes.png)

Sentiment score as assigned by NLTK over each paragraph of The Velveteen Rabbit.

Only 5 paragraphs differed by more than 1 point between the two analysis methods. The first 4 I would have personally classified as “neutral” and had a bit of trouble even coming to that conclusion, so I can’t really blame either of the algorithms for being off from each other.

The second-to-last paragraph seems pretty spot-on:

Autumn passed and Winter, and in the Spring, when the days grew warm and sunny, the Boy went out to play in the wood behind the house. And while he was playing, two rabbits crept out from the bracken and peeped at him. One of them was brown all over, but the other had strange markings under his fur, as though long ago he had been spotted, and the spots still showed through. And about his little soft nose and his round black eyes there was something familiar, so that the Boy thought to himself: “Why, he looks just like my old Bunny that was lost when I had scarlet fever!”

Pattern gave this passage a -0.01, while NLTK gave it a whopping +1.0. I think this is the most important passage in the story, and it’s what makes the whole story so sweet and special. For this to be anything other than +1 is just wrong. To be fair, the context is the important part here, and we’re definitely not at a place where an AI will pick that up. Pattern is probably right to keep this as a fairly neutral paragraph.

That being said, the aforementioned paragraph where the rabbit is waiting to be burned the next day and starts crying… pattern gave that a +0.18 where NLTK gave it a… +1.0. THIS IS THE SADDEST PARAGRAPH IN HISTORY. Nonono it is not a +1. It’s a -1million.
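The "differed by more than 1 point" check is easy to sketch. The function name is made up, and the two score pairs in the example are the ones quoted in this post; everything else about how the comparison was actually run is an assumption.

```python
def big_disagreements(pattern_scores, bayes_scores, threshold=1.0):
    """Return (index, pattern score, bayes score) wherever the two methods
    differ by more than `threshold` points."""
    return [
        (i, p, b)
        for i, (p, b) in enumerate(zip(pattern_scores, bayes_scores))
        if abs(p - b) > threshold
    ]


# Index 0: the "old Bunny" paragraph (-0.01 vs +1.0).
# Index 1: the waiting-to-be-burned paragraph (+0.18 vs +1.0).
flagged = big_disagreements([-0.01, 0.18], [1.0, 1.0])
```

Only the first pair clears the 1-point bar (a gap of 1.01); the burning paragraph's gap is 0.82, so a check like this never flags it even though both scores feel wrong.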

Moral of the story: capturing nuance is HARD. Neither captured the spine-tingling sadness of Bunny’s plight, and I’m pretty sure that NLTK only got the warm fuzzies of the ending by chance. I’ll have to spend some time looking into other tools to see if I like them any better. Alternatively, I could find some better training sets for NLTK. Rather than movie reviews, what if it were trained on actual literature?

I like that pattern seems to err on the side of neutrality rather than making definitive calls. It also, and I can’t stress this enough, runs 2000x faster. As much as I wanted to like the NLTK algorithm, I just can’t support it with the data I have. Given the choice between the two, I have to begrudgingly choose pattern.